mirror of

https://github.com/AbdBarho/stable-diffusion-webui-docker.git

synced 2026-03-03 11:53:58 +01:00

commit

8e5ac35102

11

.dockerignore

Normal file


@ -0,0 +1,11 @@
.devscripts
.github
.vscode
.idea
.editorconfig
.gitattributes
.gitignore
*.md
docs
data
output

2

.gitignore

vendored


@ -4,3 +4,5 @@
# VSCode specific
*.code-workspace
/.vscode
.idea
TODO.md

1

data/.gitignore

vendored


@ -2,3 +2,4 @@
/config
/embeddings
/models
/states

|

|

@ -1,6 +1,4 @@

|

|||

x-base_service: &base_service

|

||||

ports:

|

||||

- "${WEBUI_PORT:-7860}:7860"

|

||||

volumes:

|

||||

- &v1 ./data:/data

|

||||

- &v2 ./output:/output

|

||||

|

|

@ -8,11 +6,16 @@ x-base_service: &base_service

|

|||

tty: true

|

||||

deploy:

|

||||

resources:

|

||||

# limits:

|

||||

# cpus: 8

|

||||

# memory: 48G

|

||||

reservations:

|

||||

# cpus: 4

|

||||

# memory: 24G

|

||||

devices:

|

||||

- driver: nvidia

|

||||

device_ids: ['0']

|

||||

capabilities: [compute, utility]

|

||||

capabilities: [compute, utility, gpu]

|

||||

|

||||

name: webui-docker

|

||||

|

||||

|

|

@ -28,6 +31,8 @@ services:

|

|||

profiles: ["auto"]

|

||||

build: ./services/AUTOMATIC1111

|

||||

image: sd-auto:78

|

||||

ports:

|

||||

- "${WEBUI_PORT:-7860}:7860"

|

||||

environment:

|

||||

- CLI_ARGS=--allow-code --medvram --xformers --enable-insecure-extension-access --api

|

||||

|

||||

|

|

@ -35,6 +40,8 @@ services:

|

|||

<<: *automatic

|

||||

profiles: ["auto-cpu"]

|

||||

deploy: {}

|

||||

ports:

|

||||

- "${WEBUI_PORT:-7860}:7860"

|

||||

environment:

|

||||

- CLI_ARGS=--no-half --precision full --allow-code --enable-insecure-extension-access --api

|

||||

|

||||

|

|

@ -43,13 +50,34 @@ services:

|

|||

profiles: ["comfy"]

|

||||

build: ./services/comfy/

|

||||

image: sd-comfy:7

|

||||

volumes:

|

||||

- ./data/models:/opt/comfyui/models

|

||||

- ./data/config/comfy/custom_nodes:/opt/comfyui/custom_nodes

|

||||

- ./output/comfy:/opt/comfyui/output

|

||||

- ./data/models/configs:/opt/comfyui/user/default/

|

||||

ports:

|

||||

- "${COMFYUI_PORT:-7861}:7861"

|

||||

environment:

|

||||

- COMFYUI_PATH=/opt/comfyui

|

||||

- COMFYUI_MODEL_PATH=/opt/comfyui/models

|

||||

- CLI_ARGS=

|

||||

|

||||

# - TORCH_FORCE_NO_WEIGHTS_ONLY_LOAD=1

|

||||

|

||||

comfy-cpu:

|

||||

<<: *comfy

|

||||

profiles: ["comfy-cpu"]

|

||||

deploy: {}

|

||||

ports:

|

||||

- "${COMFYUI_PORT:-7861}:7861"

|

||||

environment:

|

||||

- CLI_ARGS=--cpu

|

||||

|

||||

auto-full:

|

||||

<<: *base_service

|

||||

profiles: [ "full" ]

|

||||

build: ./services/AUTOMATIC1111

|

||||

image: sd-auto:78

|

||||

environment:

|

||||

- CLI_ARGS=--allow-code --xformers --enable-insecure-extension-access --api

|

||||

ports:

|

||||

- "${WEBUI_PORT:-7860}:7860"

|

||||

|

|

|

|||

72

docs/FAQ.md

Normal file


|

|

@ -0,0 +1,72 @@

|

|||

# General

|

||||

|

||||

Unfortunately, AMD GPUs [#63](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/63) and macOS [#35](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/35) are not supported. Contributions to add support are very welcome.

|

||||

|

||||

## `auto` exits with error code 137

|

||||

|

||||

This indicates that the container does not have enough RAM (exit code 137 means the process was killed with SIGKILL, typically by the out-of-memory killer). You need at least 12GB; 16GB is recommended.

|

||||

|

||||

You might need to [adjust the size of the docker virtual machine RAM](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/296#issuecomment-1480318829) depending on your OS.
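
As a quick sanity check on a Linux host, the memory requirement above could be verified with a small shell helper. This is only a sketch: `has_enough_ram` is a hypothetical function name that is not part of this repository, and reading `/proc/meminfo` is an assumption that holds on Linux (including WSL2) but not on macOS.

```bash
#!/bin/bash
# Hypothetical helper (not part of this repo): succeeds when the given
# total memory (in kB) is at least the given minimum (in GB).
has_enough_ram() {
  local total_kb="$1" min_gb="$2"
  [ "$total_kb" -ge $((min_gb * 1024 * 1024)) ]
}

# Assumption: /proc/meminfo exists (Linux only).
if [ -r /proc/meminfo ]; then
  total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  if has_enough_ram "$total_kb" 12; then
    echo "RAM check passed: ${total_kb} kB visible"
  else
    echo "Less than 12GB of RAM visible: ${total_kb} kB"
  fi
fi
```

When Docker runs inside a virtual machine (Docker Desktop, WSL2), run the check inside that environment, since the limit that matters is the one the VM sees.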

|

||||

|

||||

## Dockerfile parse error

|

||||

```

|

||||

Error response from daemon: dockerfile parse error line 33: unknown instruction: GIT

|

||||

ERROR: Service 'model' failed to build : Build failed

|

||||

```

|

||||

Update Docker to the latest version, and make sure you are using `docker compose` instead of `docker-compose` [#16](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/16). Also try setting the environment variable `DOCKER_BUILDKIT=1`.

|

||||

|

||||

## Unknown Flag `--profile`

|

||||

|

||||

Update Docker to the latest version and see [this comment](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/165#issuecomment-1296155667). Also try setting the environment variable `DOCKER_BUILDKIT=1`, as mentioned in the previous point.

|

||||

|

||||

## Output is always a green image

|

||||

Use `--precision full --no-half`. [#9](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/9)

|

||||

|

||||

|

||||

## Found no NVIDIA driver on your system even though the drivers are installed and `nvidia-smi` shows it

|

||||

|

||||

Adding `NVIDIA_DRIVER_CAPABILITIES=compute,utility` and `NVIDIA_VISIBLE_DEVICES=all` to the container's environment can resolve this problem [#348](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/348#issuecomment-1449250332).
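
One way to apply these two variables without editing the main compose file is a `docker-compose.override.yml`. The sketch below writes such a file from the shell. The helper name `write_nvidia_override` is hypothetical, and the service name `auto` in the usage line is an assumption that you should replace with the service you actually run.

```bash
#!/bin/bash
# Hypothetical helper: writes an override file into the given directory
# that adds the two NVIDIA variables to the given service.
# Note: this overwrites any existing docker-compose.override.yml there.
write_nvidia_override() {
  local dir="$1" service="$2"
  cat > "$dir/docker-compose.override.yml" <<EOF
services:
  $service:
    environment:
      - NVIDIA_DRIVER_CAPABILITIES=compute,utility
      - NVIDIA_VISIBLE_DEVICES=all
EOF
}

# Usage sketch: run from the repository root.
# write_nvidia_override . auto
```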

|

||||

|

||||

|

||||

---

|

||||

|

||||

# Linux

|

||||

|

||||

### Error response from daemon: could not select device driver "nvidia" with capabilities: `[[gpu]]`

|

||||

|

||||

Install [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html) and restart the docker service [#81](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/81)

|

||||

|

||||

|

||||

### `docker compose --profile auto up --build` fails with `OSError`

|

||||

|

||||

This might be related to the `overlay2` storage driver used by Docker when it is layered on top of ZFS. Change to the `zfs` storage driver [#433](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/433#issuecomment-1694520689).

|

||||

|

||||

---

|

||||

|

||||

# Windows / WSL

|

||||

|

||||

|

||||

## Build fails at [The Shell command](https://github.com/AbdBarho/stable-diffusion-webui-docker/blob/5af482ed8c975df6aa0210225ad68b218d4f61da/build/Dockerfile#L11), `/bin/bash` not found in WSL.

|

||||

|

||||

Edit the corresponding Dockerfile and change the SHELL from `/bin/bash` to `//bin/bash` [#21](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/21). Note: this is a hack, and needing it suggests that something in your WSL installation is misconfigured.

|

||||

|

||||

|

||||

## Build fails with credentials errors when logged in via SSH on WSL2/Windows

|

||||

You can try forcing plain-text credential storage by removing the line with `"credStore"` from `~/.docker/config.json` (in WSL). [#56](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/56)

|

||||

|

||||

## `unable to access 'https://github.com/...': Could not resolve host: github.com` or any domain

|

||||

Set the `build/network` of the service you are starting to `host` [#114](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/114#issue-1393683083)

|

||||

|

||||

## Other build errors on Windows

|

||||

* Make sure:

|

||||

* Windows 10 release >= 2021H2 (required for WSL to see the GPU)

|

||||

* WSL2 (check with `wsl -l -v`)

|

||||

* Latest Docker Desktop

|

||||

* You might need to create a [`.wslconfig`](https://docs.microsoft.com/en-us/windows/wsl/wsl-config#example-wslconfig-file) and increase the memory limit. If you have 16GB of RAM, set the limit to around 12GB [#34](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/34) [#64](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/64).

|

||||

* You might also need to [force wsl to allow file permissions](https://superuser.com/a/1646556)

|

||||

|

||||

---

|

||||

|

||||

# AWS

|

||||

|

||||

You have to use one of AWS's GPU-enabled VMs and their Deep Learning OS images. These have the right drivers, the toolkit and all the rest already installed and optimized. [#70](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/70)

|

||||

1

docs/Home.md

Normal file


@ -0,0 +1 @@
Welcome to the stable-diffusion-webui-docker wiki!

25

docs/Podman-Support.md

Normal file


|

|

@ -0,0 +1,25 @@

|

|||

Thanks to [RedTopper](https://github.com/RedTopper) for this guide! [#352](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/352)

|

||||

|

||||

|

||||

On an SELinux machine like Fedora, append `:z` to the volume mounts. This tells SELinux that the files are shared between containers (required for download and your UI of choice to access the same files). *Properly merging :z with an override will work in podman compose 1.0.7, specifically commit https://github.com/containers/podman-compose/commit/0b853f29f44b846bee749e7ae9a5b42679f2649f*

|

||||

|

||||

```yaml

|

||||

x-base_service: &base_service

|

||||

volumes:

|

||||

- &v1 ./data:/data:z

|

||||

- &v2 ./output:/output:z

|

||||

```

|

||||

|

||||

You'll also need to add the runtime and security opts to allow access to your GPU. This can be specified in an override, no new versions required! More information can be found at this RedHat post: [How to enable NVIDIA GPUs in containers](https://www.redhat.com/en/blog/how-use-gpus-containers-bare-metal-rhel-8).

|

||||

|

||||

```yaml

|

||||

x-base_service: &base_service

|

||||

...

|

||||

runtime: nvidia

|

||||

security_opt:

|

||||

- label=type:nvidia_container_t

|

||||

```

|

||||

|

||||

I also had to add `,Z` to the pip/apt caches for them to work. On the first build everything will be fine without the fix, but on the second+ build, you may get a "file not found" when pip goes to install a package from the cache. Here's a script to do this easily, along with more info: https://github.com/RedTopper/Stable-Diffusion-Webui-Podman/blob/podman/selinux-cache.sh.

|

||||

|

||||

Lastly, delete all the services you don't want to use. *Using `--profile` will work in podman compose 1.0.7, specifically commit https://github.com/containers/podman-compose/commit/8d8df0bc2816d8e8fa142781d9018a06fe0d08ed*

|

||||

11

docs/Screenshots.md

Normal file


|

|

@ -0,0 +1,11 @@

|

|||

# AUTOMATIC1111

|

||||

|

||||

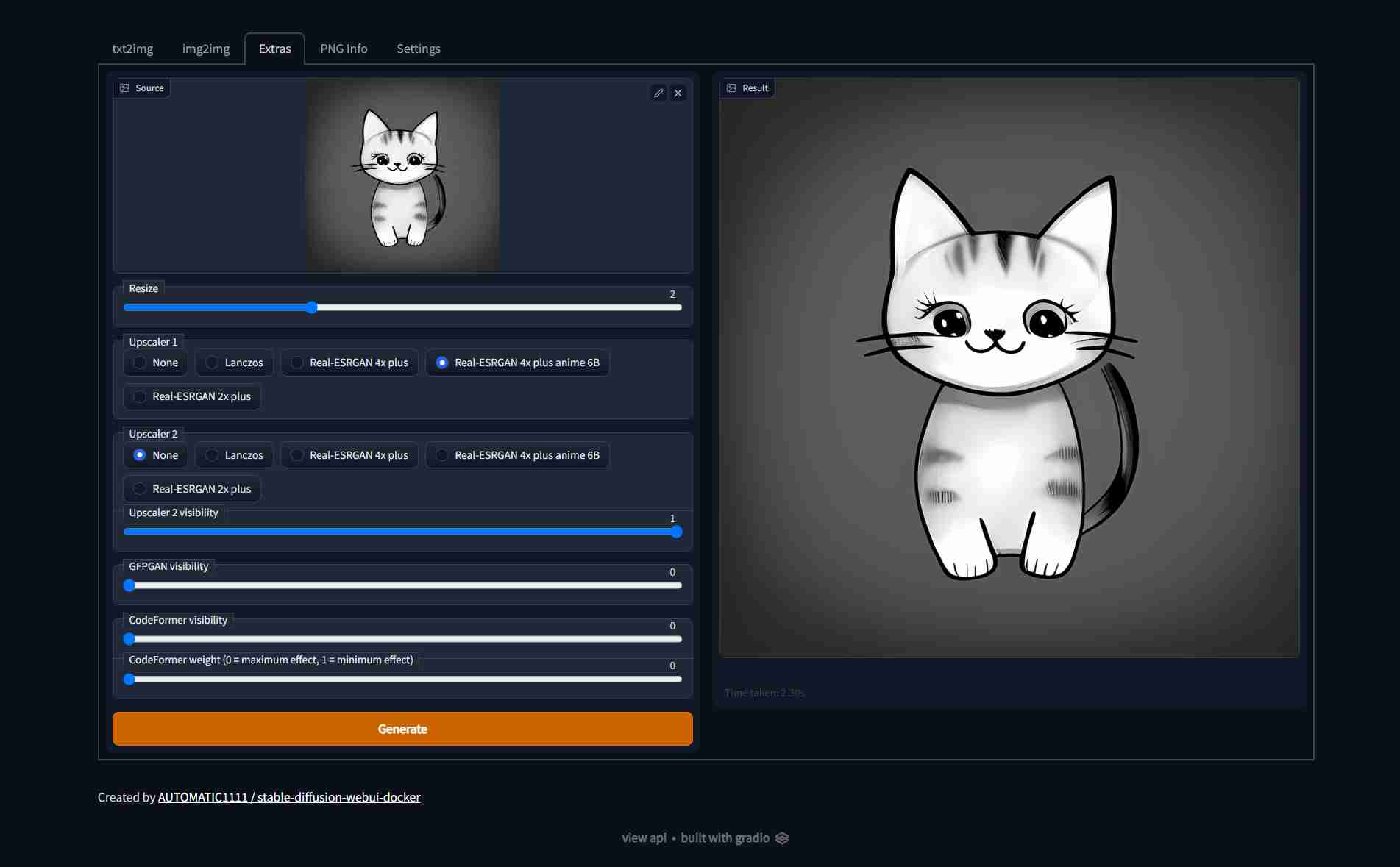

| Text to image | Image to image | Extras |

|

||||

| ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |  |

|

||||

|

||||

# lstein (invokeAI)

|

||||

|

||||

| Text to image | Image to image | Extras |

|

||||

| ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |  |

|

||||

51

docs/Setup.md

Normal file


|

|

@ -0,0 +1,51 @@

|

|||

# Make sure you have the *latest* version of docker and docker compose installed

|

||||

|

||||

TLDR:

|

||||

|

||||

Clone this repo and run:

|

||||

```bash

|

||||

docker compose --profile download up --build

|

||||

# wait until it's done, then:

|

||||

docker compose --profile [ui] up --build

|

||||

# where [ui] is one of: auto | auto-cpu | comfy | comfy-cpu

|

||||

```

|

||||

If you don't know which UI to choose, `auto` is a good start.

|

||||

|

||||

Then access from http://localhost:7860/

|

||||

|

||||

Unfortunately, AMD GPUs [#63](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/63) and Mac [#35](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues/35) are not supported. Contributions to add support are very welcome!

|

||||

|

||||

|

||||

If you face any problems, check the [FAQ page](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/FAQ), or [create a new issue](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues).

|

||||

|

||||

### Detailed Steps

|

||||

|

||||

First of all, clone this repo. You can do this with `git`, or you can download a zip file. Please always use the most up-to-date state from the `master` branch. Even though we have releases, everything is changing and breaking all the time.

|

||||

|

||||

|

||||

After cloning, open a terminal in the folder and run:

|

||||

|

||||

```

|

||||

docker compose --profile download up --build

|

||||

```

|

||||

This will download all of the required models / files, and validate their integrity. You only have to download the data once (regardless of the UI). There are roughly 12GB of data to be downloaded.

|

||||

|

||||

Next, choose which UI you want to run (you can easily change later):

|

||||

- `auto`: The most popular fork, many features with neat UI, [Repo by AUTOMATIC1111](https://github.com/AUTOMATIC1111/stable-diffusion-webui)

|

||||

- `auto-cpu`: for users without a GPU.

|

||||

- `comfy`: A graph based workflow UI, very powerful, [Repo by comfyanonymous](https://github.com/comfyanonymous/ComfyUI)

|

||||

|

||||

|

||||

After the download is done, you can run the UI using:

|

||||

```bash

|

||||

docker compose --profile [ui] up --build

|

||||

# for example:

|

||||

# docker compose --profile comfy up --build

|

||||

# or

|

||||

# docker compose --profile auto up --build

|

||||

```

|

||||

|

||||

This will start the app on http://localhost:7860/. Feel free to try out the different UIs.
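
The compose file in this commit publishes the AUTOMATIC1111 port as `${WEBUI_PORT:-7860}:7860`, so the host port can presumably be changed through the `WEBUI_PORT` environment variable. The shell uses the same `${VAR:-default}` syntax, which the sketch below relies on to print the URL to open. `webui_url` is a hypothetical helper name, not something the repository provides.

```bash
#!/bin/bash
# Hypothetical helper: prints the URL of the UI, falling back to port 7860
# when WEBUI_PORT is unset or empty (same default as in the compose file).
webui_url() {
  echo "http://localhost:${WEBUI_PORT:-7860}/"
}

webui_url
```

With this in mind, starting the UI as `WEBUI_PORT=8080 docker compose --profile auto up --build` should make it reachable on port 8080 instead, assuming nothing else overrides the port mapping.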

|

||||

|

||||

|

||||

Note: the first start will take some time since other models will be downloaded. These will be cached in the `data` folder, so subsequent runs are faster. First-time setup might take between 15 minutes and 1 hour depending on your internet connection; later starts are much faster, roughly 20 seconds.

|

||||

73

docs/Usage.md

Normal file


|

|

@ -0,0 +1,73 @@

|

|||

Assuming you [set up everything correctly](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/Setup), you can run any UI (interchangeably, but not in parallel) using the command:

|

||||

```bash

|

||||

docker compose --profile [ui] up --build

|

||||

```

|

||||

where `[ui]` is one of `auto`, `auto-cpu`, `comfy`, or `comfy-cpu`.

|

||||

|

||||

### Mounts

|

||||

|

||||

The `data` and `output` folders are always mounted into the container as `/data` and `/output`. Use them if you want to transfer anything from / to the container.

|

||||

|

||||

### Updates

|

||||

If you want to update to the latest version, just pull the changes:

|

||||

```bash

|

||||

git pull

|

||||

```

|

||||

|

||||

You can also check out specific tags if you want.

|

||||

|

||||

### Customization

|

||||

If you want to customize the behaviour of the UIs, you can create a `docker-compose.override.yml` and override whatever you want from the [main `docker-compose.yml` file](https://github.com/AbdBarho/stable-diffusion-webui-docker/blob/master/docker-compose.yml). Example:

|

||||

|

||||

```yml

|

||||

services:

|

||||

auto:

|

||||

environment:

|

||||

- CLI_ARGS=--lowvram

|

||||

```

|

||||

|

||||

Possible configuration:

|

||||

|

||||

# `auto`

|

||||

By default, `--medvram` is given, which allows you to use this model on a 6GB GPU. You can also use `--lowvram` for lower-end GPUs. Remove these arguments if you are using a (relatively) high-end GPU, like 40XX series cards, as these arguments will slow you down.

|

||||

|

||||

[You can find the full list of cli arguments here.](https://github.com/AUTOMATIC1111/stable-diffusion-webui/blob/master/modules/shared.py)
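
To illustrate removing the memory-saving flags, the sketch below filters `--medvram` and `--lowvram` out of an argument string. The helper `strip_vram_flags` is hypothetical, and the input string is copied from the `auto` service in this commit's compose file, so it may differ in your version.

```bash
#!/bin/bash
# Hypothetical helper: prints the given argument string without the
# --medvram and --lowvram flags, keeping the order of all other flags.
strip_vram_flags() {
  local out="" arg
  # Intentionally unquoted: split the string into words on whitespace.
  for arg in $1; do
    case "$arg" in
      --medvram|--lowvram) ;;          # drop the memory-saving flags
      *) out="${out:+$out }$arg" ;;    # keep everything else
    esac
  done
  printf '%s\n' "$out"
}

strip_vram_flags "--allow-code --medvram --xformers --enable-insecure-extension-access --api"
```

The printed string can then be used as the value of `CLI_ARGS` for the `auto` service in a `docker-compose.override.yml`.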

|

||||

|

||||

### Custom models

|

||||

|

||||

Put the weights in the folder `data/StableDiffusion`. You can then change the model from the settings tab.

|

||||

|

||||

### General Config

|

||||

There are multiple files in `data/config/auto`, such as `config.json` and `ui-config.json`, which contain additional config for the UI.

|

||||

|

||||

### Scripts

|

||||

Put your scripts in `data/config/auto/scripts` and restart the container.

|

||||

|

||||

### Extensions

|

||||

|

||||

You can use the UI to install extensions, or, you can put your extensions in `data/config/auto/extensions`.

|

||||

|

||||

Different extensions require additional dependencies. Some of them might conflict with each other and changing versions of packages could break things. This container will try to install missing extension dependencies on startup, but it won't resolve any problems for you.

|

||||

|

||||

There is also the option to create a script `data/config/auto/startup.sh` which will be called on container startup, in case you want to install any additional dependencies for your extensions or anything else.

|

||||

|

||||

|

||||

An example `startup.sh` might look like this:

|

||||

```sh

|

||||

#!/bin/bash

|

||||

|

||||

# opencv-python-headless to not rely on opengl and drivers.

|

||||

pip install -q --force-reinstall opencv-python-headless

|

||||

```

|

||||

|

||||

NOTE: dependencies of extensions might get lost when you create a new container, hence installing them in the startup script is important.
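
To illustrate why a startup script survives container re-creation, here is a sketch of how an entrypoint could run it on every start. It follows a pattern that appears in this commit's diff of the ComfyUI entrypoint (source the script if it exists), but `run_startup_hook` and its parameters are hypothetical names, not the actual implementation.

```bash
#!/bin/bash
# Hypothetical helper: sources the hook script from the given working
# directory if the script exists, then returns to the previous directory.
run_startup_hook() {
  local hook="$1" workdir="$2" previous_dir="$PWD"
  if [ -f "$hook" ]; then
    cd "$workdir" || return 1
    . "$hook"
    cd "$previous_dir" || return 1
  fi
}

# Usage sketch (the hook path is taken from the text above; ROOT is an
# assumption standing in for the UI's install directory):
# run_startup_hook /data/config/auto/startup.sh "$ROOT"
```

Because the script lives in the mounted `data` folder rather than in the image, it is still there after the container is removed and re-created.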

|

||||

|

||||

It is not recommended to modify the Dockerfile for the sole purpose of supporting some extension (unless you truly know what you are doing).

|

||||

|

||||

### **DON'T OPEN AN ISSUE IF A SCRIPT OR AN EXTENSION IS NOT WORKING**

|

||||

|

||||

I maintain neither the UI nor the extensions, so I can't help you.

|

||||

|

||||

|

||||

# `auto-cpu`

|

||||

CPU instance of the above, some stuff might not work, use at your own risk.

|

||||

|

|

@ -1,4 +1,4 @@

|

|||

FROM alpine/git:2.36.2 as download

|

||||

FROM alpine/git:2.36.2 AS download

|

||||

|

||||

COPY clone.sh /clone.sh

|

||||

|

||||

|

|

@ -13,8 +13,8 @@ RUN . /clone.sh clip-interrogator https://github.com/pharmapsychotic/clip-interr

|

|||

RUN . /clone.sh generative-models https://github.com/Stability-AI/generative-models 45c443b316737a4ab6e40413d7794a7f5657c19f

|

||||

RUN . /clone.sh stable-diffusion-webui-assets https://github.com/AUTOMATIC1111/stable-diffusion-webui-assets 6f7db241d2f8ba7457bac5ca9753331f0c266917

|

||||

|

||||

|

||||

FROM pytorch/pytorch:2.3.0-cuda12.1-cudnn8-runtime

|

||||

#FROM pytorch/pytorch:2.7.1-cuda12.8-cudnn9-runtime

|

||||

|

||||

ENV DEBIAN_FRONTEND=noninteractive PIP_PREFER_BINARY=1

|

||||

|

||||

|

|

@ -25,14 +25,12 @@ RUN --mount=type=cache,target=/var/cache/apt \

|

|||

# extensions needs those

|

||||

ffmpeg libglfw3-dev libgles2-mesa-dev pkg-config libcairo2 libcairo2-dev build-essential

|

||||

|

||||

|

||||

WORKDIR /

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git && \

|

||||

cd stable-diffusion-webui && \

|

||||

git reset --hard v1.9.4 && \

|

||||

pip install -r requirements_versions.txt

|

||||

|

||||

# git reset --hard v1.9.4 && \

|

||||

pip install -r requirements_versions.txt && pip install --upgrade typing-extensions

|

||||

|

||||

ENV ROOT=/stable-diffusion-webui

|

||||

|

||||

|

|

@ -60,7 +58,8 @@ RUN \

|

|||

|

||||

WORKDIR ${ROOT}

|

||||

ENV NVIDIA_VISIBLE_DEVICES=all

|

||||

ENV CLI_ARGS=""

|

||||

EXPOSE 7860

|

||||

ARG CLI_ARGS=""

|

||||

ENV WEBUI_PORT=7860

|

||||

EXPOSE $WEBUI_PORT

|

||||

ENTRYPOINT ["/docker/entrypoint.sh"]

|

||||

CMD python -u webui.py --listen --port 7860 ${CLI_ARGS}

|

||||

CMD python -u webui.py --listen --port $WEBUI_PORT ${CLI_ARGS}

|

||||

|

|

|

|||

|

|

@ -1,5 +1,5 @@

|

|||

#!/bin/bash

|

||||

|

||||

#set -x

|

||||

set -Eeuo pipefail

|

||||

|

||||

# TODO: move all mkdir -p ?

|

||||

|

|

@ -50,6 +50,7 @@ for to_path in "${!MOUNTS[@]}"; do

|

|||

rm -rf "${to_path}"

|

||||

if [ ! -f "$from_path" ]; then

|

||||

mkdir -vp "$from_path"

|

||||

# mkdir -vp "$from_path" || true

|

||||

fi

|

||||

mkdir -vp "$(dirname "${to_path}")"

|

||||

ln -sT "${from_path}" "${to_path}"

|

||||

|

|

|

|||

|

|

@ -1,22 +1,69 @@

|

|||

FROM pytorch/pytorch:2.3.0-cuda12.1-cudnn8-runtime

|

||||

# Defines the versions of ComfyUI, ComfyUI Manager, and PyTorch to use

|

||||

ARG COMFYUI_VERSION=v0.3.43

|

||||

ARG COMFYUI_MANAGER_VERSION=3.33.3

|

||||

ARG PYTORCH_VERSION=2.7.1-cuda12.8-cudnn9-runtime

|

||||

|

||||

# This image is based on the latest official PyTorch image, because it already contains CUDA, CuDNN, and PyTorch

|

||||

FROM pytorch/pytorch:${PYTORCH_VERSION}

|

||||

|

||||

ENV DEBIAN_FRONTEND=noninteractive PIP_PREFER_BINARY=1

|

||||

|

||||

RUN apt-get update && apt-get install -y git && apt-get clean

|

||||

RUN apt update --assume-yes && \

|

||||

apt install --assume-yes \

|

||||

git \

|

||||

sudo \

|

||||

build-essential \

|

||||

libgl1-mesa-glx \

|

||||

libglib2.0-0 \

|

||||

libsm6 \

|

||||

libxext6 \

|

||||

ffmpeg && \

|

||||

apt-get clean && \

|

||||

rm -rf /var/lib/apt/lists/*

|

||||

|

||||

ENV ROOT=/stable-diffusion

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

git clone https://github.com/comfyanonymous/ComfyUI.git ${ROOT} && \

|

||||

cd ${ROOT} && \

|

||||

git checkout master && \

|

||||

git reset --hard 276f8fce9f5a80b500947fb5745a4dde9e84622d && \

|

||||

pip install -r requirements.txt

|

||||

# Clones the ComfyUI repository and checks out the latest release

|

||||

RUN git clone --depth=1 https://github.com/comfyanonymous/ComfyUI.git /opt/comfyui && \

|

||||

cd /opt/comfyui && \

|

||||

git fetch origin ${COMFYUI_VERSION} && \

|

||||

git checkout FETCH_HEAD

|

||||

|

||||

WORKDIR ${ROOT}

|

||||

# Clones the ComfyUI Manager repository and checks out the latest release; ComfyUI Manager is an extension for ComfyUI that enables users to install

|

||||

# custom nodes and download models directly from the ComfyUI interface; instead of installing it to "/opt/comfyui/custom_nodes/ComfyUI-Manager", which

|

||||

# is the directory it is meant to be installed in, it is installed to its own directory; the entrypoint will symlink the directory to the correct

|

||||

# location upon startup; the reason for this is that the ComfyUI Manager must be installed in the same directory that it installs custom nodes to, but

|

||||

# this directory is mounted as a volume, so that the custom nodes are not installed inside of the container and are not lost when the container is

|

||||

# removed; this way, the custom nodes are installed on the host machine

|

||||

RUN git clone --depth=1 https://github.com/Comfy-Org/ComfyUI-Manager.git /opt/comfyui-manager && \

|

||||

cd /opt/comfyui-manager && \

|

||||

git fetch origin ${COMFYUI_MANAGER_VERSION} && \

|

||||

git checkout FETCH_HEAD

|

||||

|

||||

# Installs the required Python packages for both ComfyUI and the ComfyUI Manager

|

||||

RUN pip install \

|

||||

--requirement /opt/comfyui/requirements.txt \

|

||||

--requirement /opt/comfyui-manager/requirements.txt

|

||||

|

||||

RUN pip3 install --no-cache-dir \

|

||||

opencv-python \

|

||||

diffusers \

|

||||

triton \

|

||||

sageattention \

|

||||

psutil

|

||||

|

||||

# Sets the working directory to the ComfyUI directory

|

||||

WORKDIR /opt/comfyui

|

||||

COPY . /docker/

|

||||

RUN chmod u+x /docker/entrypoint.sh && cp /docker/extra_model_paths.yaml ${ROOT}

|

||||

RUN chmod u+x /docker/entrypoint.sh && cp /docker/extra_model_paths.yaml /opt/comfyui

|

||||

|

||||

ENV NVIDIA_VISIBLE_DEVICES=all PYTHONPATH="${PYTHONPATH}:${PWD}" CLI_ARGS=""

|

||||

EXPOSE 7860

|

||||

ENTRYPOINT ["/docker/entrypoint.sh"]

|

||||

CMD python -u main.py --listen --port 7860 ${CLI_ARGS}

|

||||

EXPOSE 7861

|

||||

|

||||

# Adds the startup script to the container; the startup script will create all necessary directories in the models and custom nodes volumes that were

|

||||

# mounted to the container and symlink the ComfyUI Manager to the correct directory; it will also create a user with the same UID and GID as the user

|

||||

# that started the container, so that the files created by the container are owned by the user that started the container and not the root user

|

||||

ENTRYPOINT ["/bin/bash", "/docker/entrypoint.sh"]

|

||||

|

||||

# On startup, ComfyUI is started at its default port; the IP address is changed from localhost to 0.0.0.0, because Docker is only forwarding traffic

|

||||

# to the IP address it assigns to the container, which is unknown at build time; listening to 0.0.0.0 means that ComfyUI listens to all incoming

|

||||

# traffic; the auto-launch feature is disabled, because we do not want (nor is it possible) to open a browser window in a Docker container

|

||||

CMD ["/opt/conda/bin/python", "main.py", "--listen", "0.0.0.0", "--port", "7861", "--disable-auto-launch"]

|

||||

|

|

|

|||

|

|

@ -1,31 +1,71 @@

|

|||

#!/bin/bash

|

||||

|

||||

set -Eeuo pipefail

|

||||

|

||||

mkdir -vp /data/config/comfy/custom_nodes

|

||||

|

||||

declare -A MOUNTS

|

||||

|

||||

MOUNTS["/root/.cache"]="/data/.cache"

|

||||

MOUNTS["${ROOT}/input"]="/data/config/comfy/input"

|

||||

MOUNTS["${ROOT}/output"]="/output/comfy"

|

||||

|

||||

for to_path in "${!MOUNTS[@]}"; do

|

||||

set -Eeuo pipefail

|

||||

from_path="${MOUNTS[${to_path}]}"

|

||||

rm -rf "${to_path}"

|

||||

if [ ! -f "$from_path" ]; then

|

||||

mkdir -vp "$from_path"

|

||||

fi

|

||||

mkdir -vp "$(dirname "${to_path}")"

|

||||

ln -sT "${from_path}" "${to_path}"

|

||||

echo Mounted $(basename "${from_path}")

|

||||

# Creates the directories for the models inside of the volume that is mounted from the host

|

||||

echo "Creating directories for models..."

|

||||

MODEL_DIRECTORIES=(

|

||||

"checkpoints"

|

||||

"clip"

|

||||

"clip_vision"

|

||||

"configs"

|

||||

"controlnet"

|

||||

"diffusers"

|

||||

"diffusion_models"

|

||||

"embeddings"

|

||||

"gligen"

|

||||

"hypernetworks"

|

||||

"loras"

|

||||

"photomaker"

|

||||

"style_models"

|

||||

"text_encoders"

|

||||

"unet"

|

||||

"upscale_models"

|

||||

"vae"

|

||||

"vae_approx"

|

||||

)

|

||||

for MODEL_DIRECTORY in ${MODEL_DIRECTORIES[@]}; do

|

||||

mkdir -p /opt/comfyui/models/$MODEL_DIRECTORY

|

||||

done

|

||||

|

||||

if [ -f "/data/config/comfy/startup.sh" ]; then

|

||||

pushd ${ROOT}

|

||||

. /data/config/comfy/startup.sh

|

||||

popd

|

||||

fi

|

||||

# Creates the symlink for the ComfyUI Manager to the custom nodes directory, which is also mounted from the host

|

||||

echo "Creating symlink for ComfyUI Manager..."

|

||||

rm --force /opt/comfyui/custom_nodes/ComfyUI-Manager

|

||||

ln -s \

|

||||

/opt/comfyui-manager \

|

||||

/opt/comfyui/custom_nodes/ComfyUI-Manager

|

||||

|

||||

exec "$@"

|

||||

# The custom nodes that were installed using the ComfyUI Manager may have requirements of their own, which are not installed when the container is

|

||||

# started for the first time; this loops over all custom nodes and installs the requirements of each custom node

|

||||

echo "Installing requirements for custom nodes..."

|

||||

for CUSTOM_NODE_DIRECTORY in /opt/comfyui/custom_nodes/*;

|

||||

do

|

||||

if [ "$CUSTOM_NODE_DIRECTORY" != "/opt/comfyui/custom_nodes/ComfyUI-Manager" ];

|

||||

then

|

||||

if [ -f "$CUSTOM_NODE_DIRECTORY/requirements.txt" ];

|

||||

then

|

||||

CUSTOM_NODE_NAME=${CUSTOM_NODE_DIRECTORY##*/}

|

||||

CUSTOM_NODE_NAME=${CUSTOM_NODE_NAME//[-_]/ }

|

||||

echo "Installing requirements for $CUSTOM_NODE_NAME..."

|

||||

pip install --requirement "$CUSTOM_NODE_DIRECTORY/requirements.txt"

|

||||

fi

|

||||

fi

|

||||

done

|

||||

|

||||

# Under normal circumstances, the container would be run as the root user, which is not ideal, because the files that are created by the container in

|

||||

# the volumes mounted from the host, i.e., custom nodes and models downloaded by the ComfyUI Manager, are owned by the root user; the user can specify

|

||||

# the user ID and group ID of the host user as environment variables when starting the container; if these environment variables are set, a non-root

|

||||

# user with the specified user ID and group ID is created, and the container is run as this user

|

||||

if [ -z "${USER_ID:-}" ] || [ -z "${GROUP_ID:-}" ];

|

||||

then

|

||||

echo "Running container as $USER..."

|

||||

exec "$@"

|

||||

else

|

||||

echo "Creating non-root user..."

|

||||

getent group $GROUP_ID > /dev/null 2>&1 || groupadd --gid $GROUP_ID comfyui-user

|

||||

id -u $USER_ID > /dev/null 2>&1 || useradd --uid $USER_ID --gid $GROUP_ID --create-home comfyui-user

|

||||

chown --recursive $USER_ID:$GROUP_ID /opt/comfyui

|

||||

chown --recursive $USER_ID:$GROUP_ID /opt/comfyui-manager

|

||||

export PATH=$PATH:/home/comfyui-user/.local/bin

|

||||

|

||||

echo "Running container as $USER..."

|

||||

sudo --set-home --preserve-env=PATH --user \#$USER_ID "$@"

|

||||

fi

|

||||

|

|

|

|||

|

|

@ -1,25 +1,33 @@

|

|||

a111:

|

||||

base_path: /data

|

||||

base_path: /opt/comfyui

|

||||

# base_path: /data

|

||||

|

||||

checkpoints: models/Stable-diffusion

|

||||

configs: models/Stable-diffusion

|

||||

configs: models/configs

|

||||

vae: models/VAE

|

||||

loras: models/Lora

|

||||

loras: |

|

||||

models/Lora

|

||||

models/loras

|

||||

hypernetworks: models/hypernetworks

|

||||

controlnet: models/controlnet

|

||||

gligen: models/GLIGEN

|

||||

clip: models/CLIPEncoder

|

||||

embeddings: embeddings

|

||||

|

||||

unet: models/unet

|

||||

upscale_models: |

|

||||

models/RealESRGAN

|

||||

models/ESRGAN

|

||||

models/SwinIR

|

||||

models/GFPGAN

|

||||

hypernetworks: models/hypernetworks

|

||||

controlnet: models/ControlNet

|

||||

gligen: models/GLIGEN

|

||||

clip: models/CLIPEncoder

|

||||

embeddings: embeddings

|

||||

|

||||

custom_nodes: config/comfy/custom_nodes

|

||||

models/upscale_models

|

||||

diffusion_models: models/diffusion_models

|

||||

text_encoders: models/text_encoders

|

||||

clip_vision: models/clip_vision

|

||||

custom_nodes: /opt/comfyui/custom_nodes

|

||||

# custom_nodes: config/comfy/custom_nodes

|

||||

|

||||

# TODO: I am unsure about these, need more testing

|

||||

# style_models: config/comfy/style_models

|

||||

# t2i_adapter: config/comfy/t2i_adapter

|

||||

# clip_vision: config/comfy/clip_vision

|

||||

# diffusers: config/comfy/diffusers

|

||||

|

|

|

|||
